Scientists created a psychopathic AI using Reddit images

Look what you've done now, Reddit.

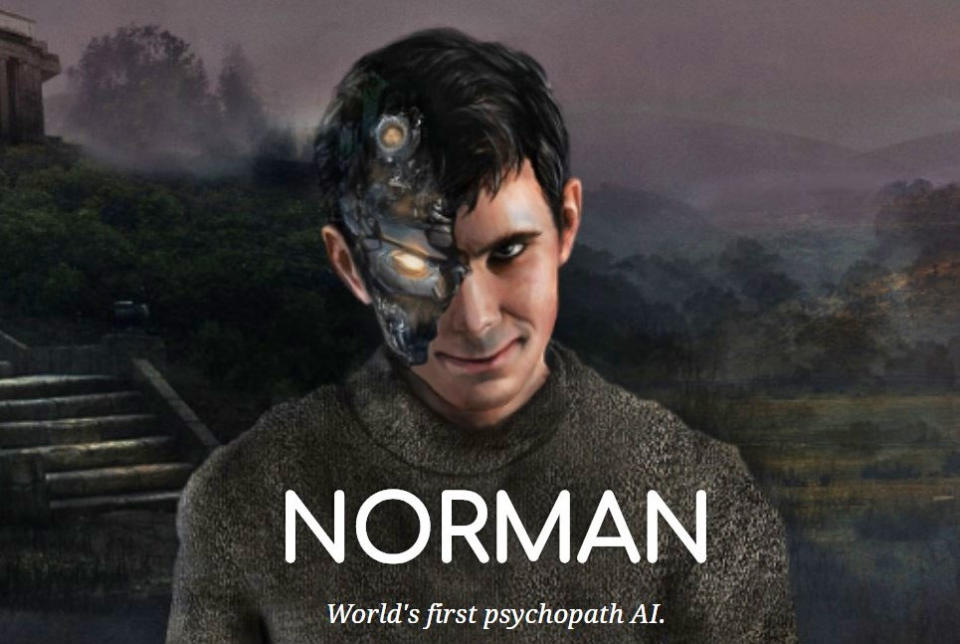

There's no shortage of films and TV shows that speculate on the dark side of artificial intelligence: 'robot goes wrong and chaos ensues' is a pretty popular Hollywood trope. Now, in a study that sounds like the plot of a movie itself, researchers have actively encouraged an AI algorithm to embrace evil by training it to become a psychopath. A psychopath called Norman.

In the study, scientists from the Massachusetts Institute of Technology (MIT) exposed Norman (named after Norman Bates, Anthony Perkins' character in Psycho) to a constant stream of captions describing violent and gruesome images from the darkest corners of Reddit, and then presented it with Rorschach inkblot tests. The results were downright chilling.

In one test, a standard AI saw a vase with flowers. Norman saw a man being shot dead. In another, the standard AI reported a person holding an umbrella in the air. Norman saw a man being shot dead in front of his screaming wife. Where the standard AI saw a touching scene of a couple standing together, Norman saw a pregnant woman falling from a building. According to the researchers, having been constantly exposed to negative images and depressive thinking, Norman's empathy logic simply failed to activate.

The disturbing study was designed to show that machine learning is significantly influenced by the data it is trained on, and that when algorithms are accused of being biased or unfair, the fault often lies not with the algorithm but with the data that's been fed into it. This can have significant consequences in everything from employment to municipal services, and, as creepy Norman demonstrates, maybe even for the future safety of humankind.
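
To make the point concrete, here is a toy sketch in Python. It is not the MIT team's method, only an illustration of the same idea: one unchanged algorithm, two different training sets, two very different answers. The function name `nearest_caption`, the two-number "image features" and their values are all invented for this example; the captions are borrowed from the tests described above.

```python
def nearest_caption(training_pairs, query):
    """Return the caption of the training example whose features are closest to `query`."""
    def sq_dist(a, b):
        # Squared Euclidean distance between two feature tuples.
        return sum((x - y) ** 2 for x, y in zip(a, b))

    _, caption = min(training_pairs, key=lambda pair: sq_dist(pair[0], query))
    return caption


# Hypothetical training sets: identical features, different captions.
# The numbers are made up purely for illustration.
neutral_data = [
    ((0.2, 0.8), "a vase with flowers"),
    ((0.9, 0.1), "a person holding an umbrella in the air"),
]
grim_data = [
    ((0.2, 0.8), "a man being shot dead"),
    ((0.9, 0.1), "a pregnant woman falling from a building"),
]

# The same "inkblot" is shown to the same algorithm trained on each data set.
inkblot = (0.25, 0.75)
print("Standard AI sees:", nearest_caption(neutral_data, inkblot))
print("Norman sees:", nearest_caption(grim_data, inkblot))
```

The code that produces the answer never changes between the two calls. Only the examples it learned from do, which is the researchers' argument in miniature.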