DeepLearning

Latest

Google can turn an ordinary PC into a deep learning machine

Time is one of the biggest obstacles to the adoption of deep learning. It can take days to train one of these systems even if you have massive computing power at your disposal -- on more modest hardware, it can take weeks. Google might just fix that. It's releasing an open source tool, Tensor2Tensor, that can quickly train deep learning systems using TensorFlow. In the case of its best training model, you can achieve previously cutting-edge results in one day using a single GPU. In other words, a relatively ordinary PC can achieve results that previously required supercomputer-level machinery.

Monsanto bets on AI to protect crops against disease

Monsanto has drawn plenty of criticism for its technology-driven (and heavily litigious) approach to agriculture, but its latest effort might just hint at the future of farming. It's partnering with Atomwise on the use of AI to quickly discover molecules that can protect crops against disease and pests. Rather than ruling out molecules one at a time, Atomwise will use its deep learning to predict the likelihood that a given molecule will have the desired effect. It's whittling down the candidate list to those molecules that are genuinely promising.
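As a rough illustration of that shortlisting idea, here is a minimal Python sketch. It is not Atomwise's method: the molecule names, the scores and the `shortlist` helper are all hypothetical, and the scores simply stand in for whatever likelihood a trained model might output.

```python
from typing import Dict, List


def shortlist(scores: Dict[str, float], threshold: float) -> List[str]:
    """Return candidates whose predicted score meets the threshold, best first."""
    keep = [name for name, score in scores.items() if score >= threshold]
    return sorted(keep, key=lambda name: scores[name], reverse=True)


# Hypothetical model outputs: predicted probability that each molecule works.
predicted = {"mol_a": 0.12, "mol_b": 0.91, "mol_c": 0.67, "mol_d": 0.05}

# Keep only the candidates the model considers more likely than not to work.
promising = shortlist(predicted, threshold=0.5)
print(promising)
```

The point of the sketch is the workflow, not the model: every candidate gets a score up front, and only those above the cutoff move on to real-world testing.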

Google wants to speed up image recognition in mobile apps

Cloud-based AI is so last year, because now the major push from companies like chip designer ARM, Facebook and Apple is to fit deep learning onto your smartphone. Google wants to spread deep learning to more developers, so it has unveiled a mobile AI vision model called MobileNets. The idea is to enable low-powered image recognition that's already trained, so that developers can add imaging features without using slow, data-hungry and potentially intrusive cloud computing.

Humans can help AI learn games more quickly

Google taught DeepMind to play Atari games all on its own, but letting humans help may be faster, according to researchers from Microsoft and Germany. They invited folks of varying skills to play five Atari 2600 titles: Ms. Pac-Man, Space Invaders, Video Pinball, Q*Bert and Montezuma's Revenge. After watching 45 hours of human gameplay, the algorithm could beat its mentors at pinball, though it struggled at Montezuma's Revenge -- just as DeepMind did.

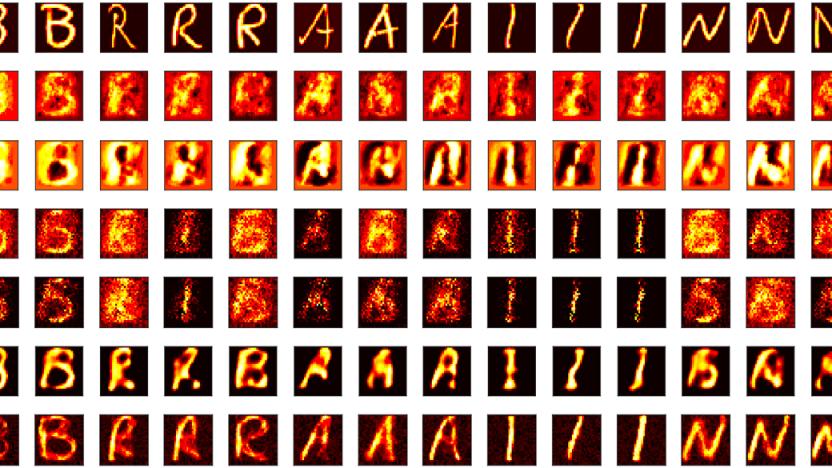

Neural network learns to reproduce what your brain sees

Scientists dream of recreating mental images through brain scans, but current techniques produce results that are... fuzzy, to put it mildly. A trio of Chinese researchers might just solve that. They've developed neural network algorithms that do a much better job of reproducing images taken from functional MRI scans. The team trains its network to recreate images by feeding it the visual cortex scans of someone looking at a picture and asking the network to recreate the original image based on that data. After enough practice, it's off to the races -- the system knows how to correlate voxels (3D pixels) in scans so that it can generate accurate, noise-free images without having to see the original.

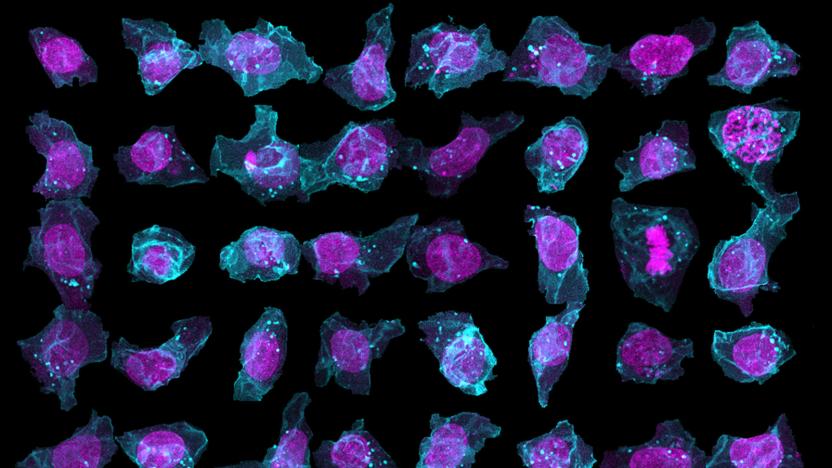

AI predicts the layout of human stem cells

The structures of stem cells can vary wildly, even if they're genetically identical -- and that could be critical to predicting the onset of diseases like cancer. But how do you know what a stem cell will look like until it's already formed? That's where the Allen Institute wants to help: it's launching an online database, the Allen Cell Explorer, where deep learning AI predicts the layout of human stem cells. You only need a pair of identifying structures, like the position of the nucleus, to fill out the rest of the cell's innards.

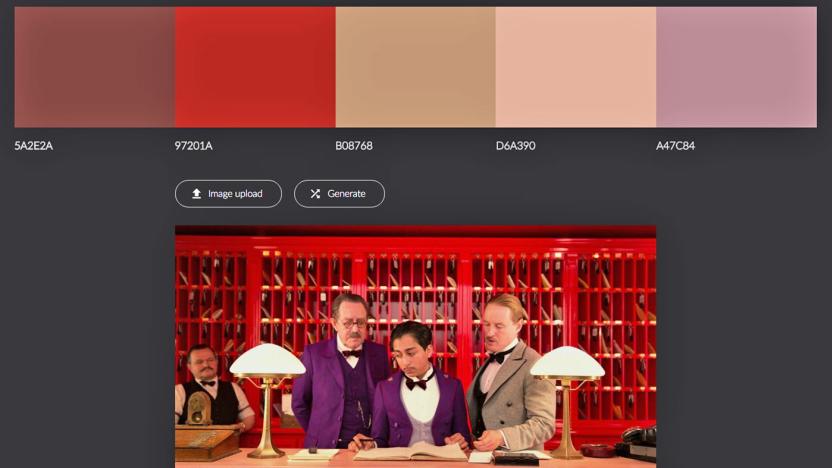

Use AI to turn your favorite film into a color palette

If you're seeking color inspiration from a distinctive-looking film like The Grand Budapest Hotel, you could just "eyedrop" it in Photoshop or try an app like Adobe Color CC. Thanks to Vancouver-based developer Jack Qiao, though, there's now a slightly easier way. He came up with Colormind, an AI algorithm that uses films, video games, fashion and art to "generate color suggestions that fit the distinct visual style of those mediums," he says.

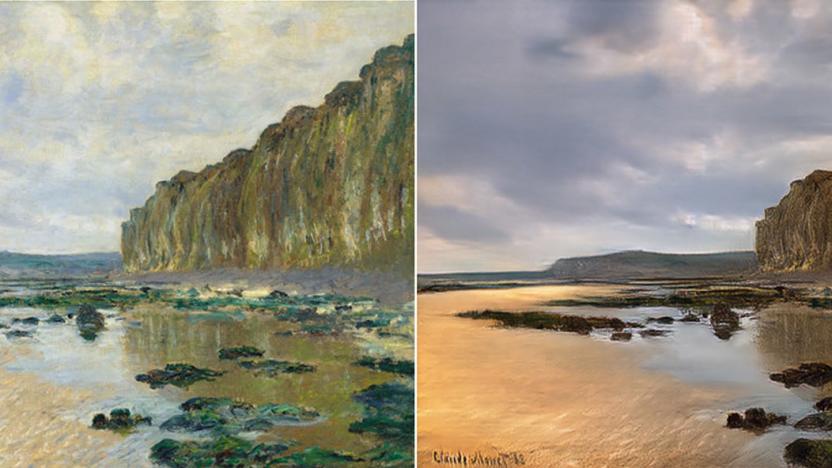

'Reverse Prisma' AI turns Monet paintings into photos

Impressionist art is more about feelings than realism, but have you ever wondered what Monet actually saw when he created pieces like Low Tide at Varengeville (above)? Thanks to researchers from UC Berkeley, you don't need to go to Normandy and wait for the perfect light. Using "image style transfer" they converted his impressionist paintings into a more realistic photo style, the exact opposite of what apps like Prisma do. The team also used the same AI to transform a drab landscape photo into a pastel-inflected painting that Monet himself may have executed.

An AI taught me to be a better tweeter

My name is Daniel Cooper, and I tweet ... a lot. Twitter is an extension of my subconscious, a pressure valve that lets half-baked thoughts escape my mind. In the last seven years, I've tweeted 73,811 times, and yet none of those 140-character messages has made me internet-famous. For all my efforts, I've accrued just 5,635 followers, most of whom are in tech and were probably made to follow me by their boss. It seems that no matter how much I try, I'm never going to become a celebrity tweeter.

AI predicts how athletes will react in certain situations

When you think of sports analysis, you probably think of raw stats like time in the opposing half or shots on goal. However, that doesn't really tell teams how they should have played beyond vague suggestions. Researchers at Disney, Caltech and STATS believe they can do better: they've developed a system that uses deep learning to analyze athletes' decision-making processes. After enough training based on players' past actions, the system's neural networks can predict future moves and create a "ghost" of a player's typical performance. If a team flubbed a play, it could compare the real action against the predictive ghosts of more effective teams to see how players should have acted.

MIT's 'Super Smash Bros.' AI can compete with veteran players

For expert players, most video game AI amounts to little more than target practice -- especially in fighting games, where it rarely accounts for the subtleties of human behavior. At MIT, though, they've developed a Super Smash Bros. Melee AI that should make even seasoned veterans sweat a little. The CSAIL team trained a neural network to fight by handing it the coordinates of game objects, and giving it incentives to play in ways that should secure a win. The result is an AI brawler that has largely learned to fight on its own -- and is good enough to usually prevail over players ranked in the top 100 worldwide.

AI can predict autism through babies' brain scans

Scientists know that the first signs of autism can appear in early childhood, but reliably predicting that at very young ages is difficult. A behavior questionnaire is a crapshoot at 12 months. However, artificial intelligence might just be the key to making an accurate call. University of North Carolina researchers have developed a deep learning algorithm that can predict autism in babies with a relatively high 81 percent accuracy and 88 percent sensitivity. The team trained the algorithm to recognize early hints of autism by feeding it brain scans and asking it to watch for three common factors: the brain's surface area, its volume and the child's gender (as boys are more likely to have autism). In tests, the AI could spot the telltale increase in surface area as early as 6 months, and a matching increase in volume as soon as 12 months -- it wasn't a surprise that most of these babies were formally diagnosed with autism at 2 years old.
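For readers unsure how accuracy and sensitivity differ, here is a minimal Python sketch of the two standard formulas. The counts below are invented for illustration and are not the study's data; they were picked only so the arithmetic lands on 81 percent and 88 percent.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Share of all predictions, positive or negative, that were correct."""
    return (tp + tn) / (tp + tn + fp + fn)


def sensitivity(tp: int, fn: int) -> float:
    """Share of actual positive cases that the model caught."""
    return tp / (tp + fn)


# Hypothetical counts (NOT from the study): true/false positives and negatives.
tp, fn = 22, 3    # children with autism: correctly flagged vs. missed
tn, fp = 59, 16   # children without autism: correctly cleared vs. wrongly flagged

acc = accuracy(tp, tn, fp, fn)
sens = sensitivity(tp, fn)
print(f"accuracy={acc:.2f} sensitivity={sens:.2f}")
```

Sensitivity only looks at the children who actually have the condition, which is why it can be higher than overall accuracy when the model produces some false alarms.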

Apple reportedly buys an AI-based face recognition startup

Those rumors of Apple exploring facial recognition for sign-ins might just have some merit. Calcalist reports that Apple has acquired RealFace, an Israeli startup that developed deep learning-based face authentication technology. The terms of the deal aren't public, but it's estimated at "several million dollars." In other words, Cupertino would be more interested in the promise of the technology than in pure resources.

Play a piano duet with Google's AI partner

When Google tries to educate the public about its AI research, it often releases tools that playfully explain the grittier, technical corners of artificial intelligence. Like, say, neural network software that looks at everyday objects through your device's camera and spits rhymes about them. But it also launches fun tools, like AI Duet, an interactive web-based app that accompanies your piano plinking.

AI is learning to speed read

As clever as machine learning is, there's one common problem: you frequently have to train the AI on thousands or even millions of examples to make it effective. What if you don't have weeks to spare? If Gamalon has its way, you could put AI to work almost immediately. The startup has unveiled a new technique, Bayesian Program Synthesis, that promises AI you can train with just a few samples. The approach uses probabilistic code to fill in gaps in its knowledge. If you show it very short and tall chairs, for example, it should figure out that there are many chair sizes in between. And importantly, it can tweak its own models as it goes along -- you don't need constant human oversight in case circumstances change.

Nest Cams can automatically detect your doors

Nest is improving both its apps and its camera smarts. An update to both iOS and Android apps (if your phones and tablets are on the latest versions) focuses on notifications, with Nest Aware subscribers getting the bulk of the benefits. Over the next few weeks, Aware customers will see automatic door detection appear on both their indoor and outdoor Nest Cam feeds. The cameras will attempt to recognize motion patterns over time, feeding the data into deep learning algorithms that automatically create "activity zones" around any doors they pick up. The cameras can then send you notifications when there's movement in those areas. You'll also be able to redraw activity zones if your camera detects something different -- or if there are multiple doors.
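To make the "activity zone" idea concrete, here is a minimal Python sketch of the notification step. It assumes a zone is a simple rectangle in pixel coordinates and that motion arrives as (x, y) points; Nest hasn't described its implementation, so the `Zone` class, the coordinates and the event list are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Zone:
    """A rectangular activity zone in pixel coordinates (hypothetical model)."""
    left: int
    top: int
    right: int
    bottom: int

    def contains(self, x: int, y: int) -> bool:
        # Points on the border count as inside the zone.
        return self.left <= x <= self.right and self.top <= y <= self.bottom


# Hypothetical zone drawn around a detected door.
front_door = Zone(left=100, top=50, right=220, bottom=400)

# Hypothetical motion events reported by the camera as (x, y) points.
motion_events: List[Tuple[int, int]] = [(150, 200), (500, 300), (220, 400)]

# Only motion inside the zone would trigger a notification.
alerts = [point for point in motion_events if front_door.contains(*point)]
print(alerts)
```

Redrawing a zone, in this toy model, just means replacing `front_door` with a new rectangle; handling several doors means checking each event against a list of zones.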

AI is nearly as good as humans at identifying skin cancer

If you're worried about the possibility of skin cancer, you might not have to depend solely on the keen eye of a dermatologist to spot signs of trouble. Stanford researchers (including tech luminary Sebastian Thrun) have discovered that a deep learning algorithm is about as effective as humans at identifying skin cancer. By training an existing Google image recognition algorithm using over 130,000 photos of skin lesions representing 2,000 diseases, the team made an AI system that could detect both different cancers and benign lesions with uncanny accuracy. In early tests, its performance was "at least" 91 percent as good as a hypothetically flawless system.

AI 'friends' will help you pass the time on autonomous drives

Even more so than last year, CES 2017 was the unofficial auto show for the tech world. Automakers filled the North Hall and the Gold Lot of the Las Vegas Convention Center with self-driving prototypes and concept cars. But instead of talking about the power of Lidar or number-crunching processors, many started focusing on what the hell their passengers will do once they take their hands off the wheel.

Audi and NVIDIA work together on AI-powered cars

NVIDIA isn't content with making an artificial intelligence platform for cars and waiting for someone to use it. The company has unveiled a partnership with Audi that has the two working on AI-powered cars. You'll see the fruits of their labor in an experimental Q7 SUV that has learned to drive itself in three days (it'll be puttering around CES's Gold Lot), but their plans are much bigger. Ultimately, their goal is to have Audis with Level 4 autonomy (that is, full autonomy outside of extreme situations) on roads by 2020. That's only three years away, which is fairly aggressive compared to promises made by other German automakers.

Even smart toothbrushes have AI now

Before the likes of Oral-B started selling Bluetooth-enabled, app-connected toothbrushes, there was Kolibree. The startup developed one of the first smart toothbrushes that incentivized regular brushing and documented oral hygiene habits. We caught the first Kolibree brush at CES several years ago ahead of its successful Kickstarter campaign, and this year the company is back at the tech show with a new model: the Ara. So, what's the latest innovation in smart toothbrushes? AI, of course.