Deep Learning

Latest

Toyota's latest self-driving car is more aware of its surroundings

Toyota only unveiled its second-generation self-driving testbed half a year ago, but it's already back with an update. The automaker is showing off a Platform 2.1 research vehicle that has made some big technology strides... including some unusual design decisions. The biggest upgrade is an awareness of its surroundings: the modified Lexus is using new lidar from Luminar that not only sees farther and maps more data but also has a "dynamically configurable" field of view that focuses its attention on the areas where it's needed most. There are also new deep learning AI models that are better at spotting objects around the car and at predicting a safe path.

Intel unveils an AI chip that mimics the human brain

Lots of tech companies, including Apple, Google, Microsoft, NVIDIA and Intel itself, have created chips for image recognition and other deep-learning chores. However, Intel is also taking another tack with an experimental chip called "Loihi." Rather than relying on raw computing horsepower, it uses an old-school, as-yet-unproven type of "neuromorphic" tech that's modeled after the human brain.

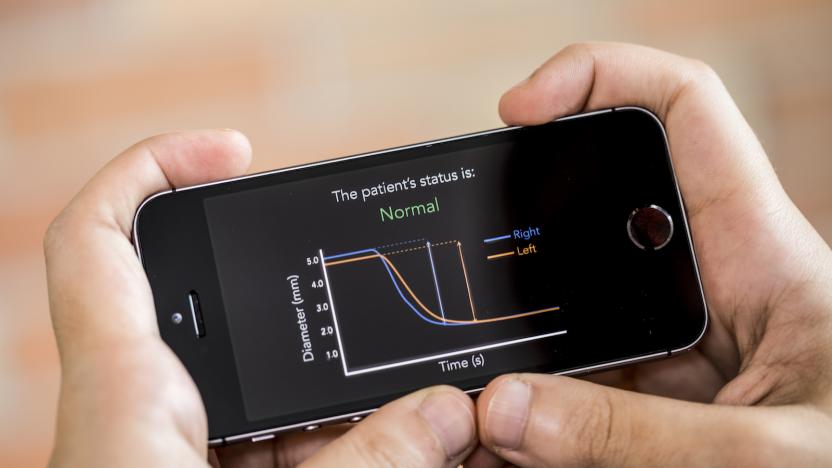

Smartphones could someday assess brain injuries

Researchers at the University of Washington are developing a simple way to assess potential concussions and other brain injuries with just a smartphone. The team has developed an app called PupilScreen that uses video and a smartphone's camera flash to record and calculate how the pupils respond to light.

Microsoft built a hardware platform for real-time AI

In many cases, you want AI to work with info as it happens. A virtual assistant needs to respond within a few seconds at most, and a smart security camera needs to send an alert while intruders are still within sight. Microsoft knows this very well. It just unveiled its own hardware acceleration platform, Project Brainwave, which promises speedy, real-time AI in the cloud. Thanks to Intel's new Stratix 10 field-programmable gate array (FPGA) chip, it can crunch a hefty 39.5 teraflops in machine learning tasks with less than 1 millisecond of latency, and without having to batch tasks together. In other words, it can handle complex AI tasks as they're received.

Prisma hopes to market its AI photo filtering tech

Prisma's machine learning photography app may not be as hot as it was in 2016, but that doesn't mean it's going away. If the developer has its way, you'll see its technology in many places before long. The company tells The Verge that it's shifting its focus from just its in-house app to marketing numerous computer vision tools based on its AI technology, ranging from object recognition to face mapping and detecting the foreground in an image. In theory, you'd see Prisma's clever processing find its way into your next phone or a favorite social photography app.

An AI ‘nose’ can remember different scents

Russian researchers are using deep learning neural networks to sniff out potential scent-based threats. The technique is a bit dense (as anything with neural nets tends to be), but the gist is that the electronic "nose" can remember new smells and recognize them after the fact.

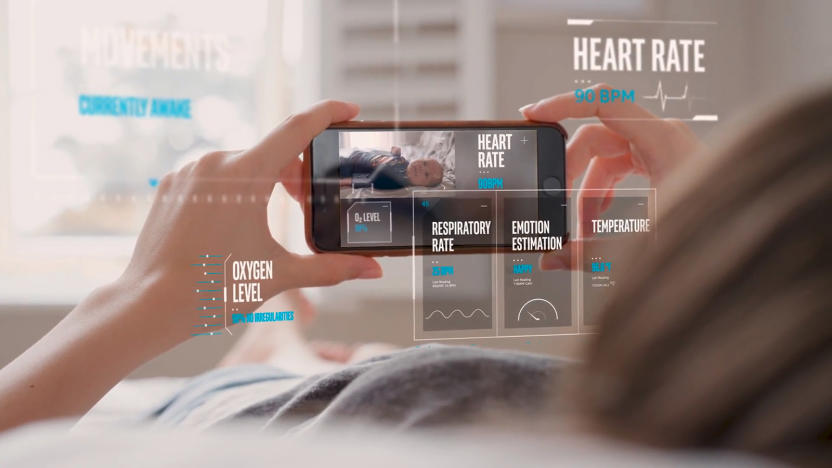

Nanit the AI nanny tries to unravel the mysteries of a restless baby

When my wife and I became parents, the most important weapon in our childcare arsenal was an A5-size notebook. In this mighty tome we wrote out every single data point relating to our new baby, from the quantity of milk she drank and duration of sleep through to the volume of excreta. It was, after all, only with this information that we were able, in our sleep-deprived and confused state, to coordinate how to meet her needs.

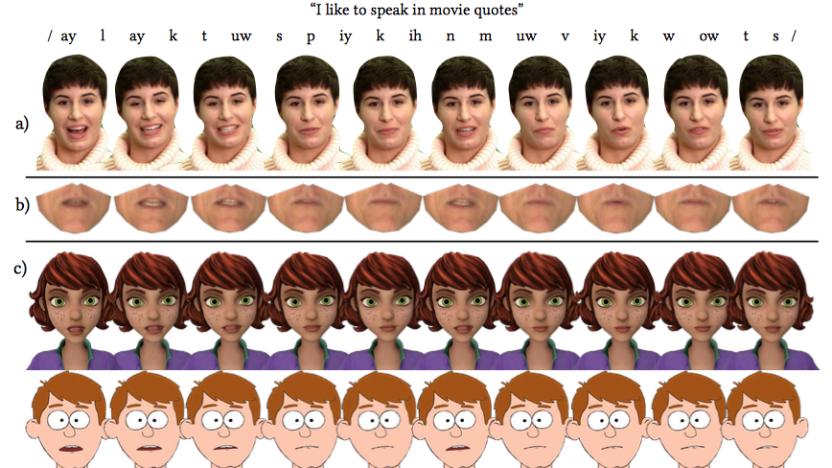

Researchers develop method for real-time speech animation

Researchers at the University of East Anglia, Caltech, Carnegie Mellon University and Disney have created a way to animate speech in real time. With their method, rather than having skilled animators manually match an animated character's mouth to recorded speech, new dialogue can be incorporated automatically in much less time and with a lot less effort.

Intel puts Movidius AI tech on a $79 USB stick

Last year, Movidius announced its Fathom Neural Compute Stick, a USB thumb drive that makes its image-based deep learning capabilities super accessible. But in September of last year, Intel bought Movidius, delaying Fathom's expected winter rollout. Now Intel has announced that the deep neural network processing stick is available under its new name, the Movidius Neural Compute Stick. "Designed for product developers, researchers and makers, the Movidius Neural Compute Stick aims to reduce barriers to developing, tuning and deploying AI applications by delivering dedicated high-performance deep-neural network processing in a small form factor," said Intel in a statement.

Google's new AI acquisition aims to fix developing world problems

As part of its continued push into the AI sector, Google has just revealed that it has purchased a new deep learning startup. India-based Halli Labs is the latest addition to Google's Next Billion Users team, joining the world-leading tech company less than two months after the startup's first public appearance. The young company has described its mission at Google as "to help get more technology and information into more people's hands around the world." Halli announced the news itself in a brief post on Medium, and Caesar Sengupta, a VP at Google, confirmed the purchase shortly afterwards on Twitter:

"Welcome @Pankaj and the team at @halli_labs to Google. Looking forward to building some cool stuff together. https://t.co/wiBP1aQxE9" (Caesar Sengupta, @caesars, July 12, 2017)

Google's latest venture fund will back AI startups

There's no question that Google believes artificial intelligence is the future, but it doesn't feel like it needs to do all the hard work by itself. It's willing to give others a helping hand. To that end, Google has launched a venture capital firm, Gradient Ventures, that will offer financial backing and "technical mentorship" to AI startups. Those startups will have access to experts from Google itself, including futurist Ray Kurzweil, design mastermind Matias Duarte and X lab leader Astro Teller.

Algorithm spots abnormal heart rhythms with doctor-like accuracy

While not all arrhythmias are fatal or even dangerous, they're still a cause for concern. Some, after all, could cause heart failure and cardiac arrest, and a lot of people with abnormal heart rhythms don't even show symptoms. A team of researchers from Stanford University might have found a way to effectively diagnose the condition even if a person isn't exhibiting symptoms, and even without a doctor. They've developed an algorithm that can detect 14 types of arrhythmia, and they claim that, based on their tests, it can perform "better than trained cardiologists."

AI vision can determine why neighborhoods thrive

It's relatively easy to figure out whether or not a neighborhood is doing well at one moment in time. More often than not, you just have to look around. But how do you measure the progress (or deterioration) a neighborhood makes? That's where AI might help. Researchers have built a computer vision system that can determine the rate of improvement or decay in a given urban area. The team taught a machine learning system to compare 1.6 million pairs of photos (each taken several years apart) from Google Street View to look for signs of change on a pixel-by-pixel, object-by-object basis. If there are more green spaces or key building types in the newer shot, for instance, that's a sign that an area is on the up-and-up.

Google's next DeepMind AI research lab opens in Canada

Google's DeepMind artificial intelligence team has been based in the UK ever since it was acquired in 2014. However, it's finally ready to branch out, just not to the US. DeepMind has announced that its first international research lab is coming to the Canadian prairie city of Edmonton, Alberta, later in July. A trio of University of Alberta computer science professors (Richard Sutton, Michael Bowling and Patrick Pilarski) will lead the group, which includes seven more AI veterans. But why not an American outpost?

Samsung needs data before Bixby is ready for English speakers

Wondering why Samsung still hasn't enabled Bixby voice features in English despite promising a launch in the spring? Apparently, it's down to a lack of info. A spokesperson tells the Korea Herald that the company just doesn't have enough "big data" to make its AI-powered voice assistant available in languages besides Korean. It needs that extra knowledge to train Bixby's deep learning system, the Herald says. That's borne out by US beta testing: Samsung says there have been some "unsatisfactory" responses so far.

GSK will use supercomputers to develop new drugs

Developing a new drug is a long, complicated and expensive process that takes years before you get to human trials. There's a hope that computers will be able to simulate the majority of the process, greatly reducing the cost and time involved. That's why GlaxoSmithKline is throwing $43 million in the direction of Scottish AI company Exscientia, which promises to use deep learning to find new drugs.

Microsoft made its AI work on a $10 Raspberry Pi

When you're far from a cell tower and need to figure out if that bluebird is Sialia sialis or Sialia mexicana, no cloud server is going to help you. That's why companies are squeezing AI onto portable devices, and Microsoft has just taken that to a new extreme by putting deep learning algorithms onto a Raspberry Pi. The goal is to get AI onto "dumb" devices like sprinklers, medical implants and soil sensors to make them more useful, even if there's no supercomputer or internet connection in sight.

Scientists made an AI that can read minds

Whether it's using AI to help organize a Lego collection or relying on an algorithm to protect our cities, deep learning neural networks seemingly become more impressive and complex each day. Now, however, some scientists are pushing the capabilities of these algorithms to a whole new level: they're trying to use them to read minds.

Sony's unorthodox take on AI is now open source

When it comes to AI, Sony isn't mentioned in the same breath as Google, Amazon and Apple. However, let's remember that it was at the forefront of deep learning with products like the Aibo robot dog, and it has recently used the technology in the Echo-like Xperia Agent (above) and Xperia Ear. Now Sony has revealed that it's finally ready to share its AI technology with developers and engineers so they can incorporate it into their own products and services.

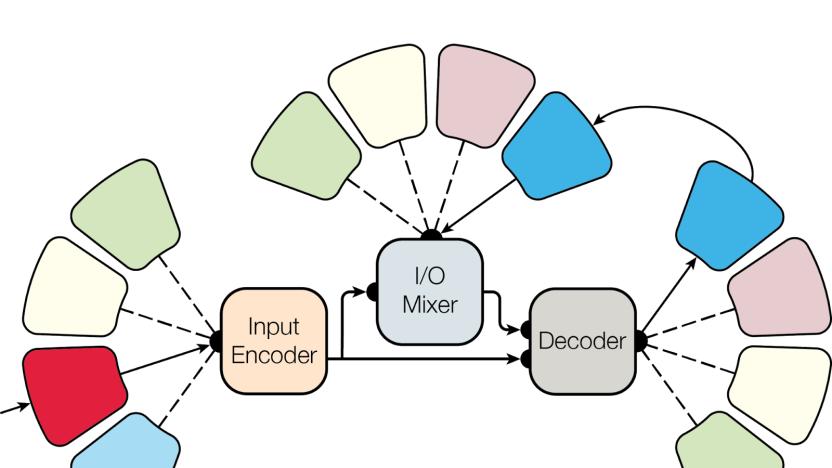

Google's neural network is a multi-tasking pro

Neural networks have been trained to complete a number of different tasks including generating pickup lines, adding animation to video games, and guiding robots to grab objects. But for the most part, these systems are limited to doing one task really well. Trying to train a neural network to do an additional task usually makes it much worse at its first.