Machine Learning


How computational photography is making your photos better

Phone cameras have undergone huge improvements in recent years, but they've done so without the hardware changing all that much. Sure, lenses and sensors continue to improve, but the big developments have all been in software. So-called computational photography is using algorithms and even machine learning to stitch together multiple photos to yield better results than were previously possible from a tiny lens and sensor. Smartphones are limited by physics. With a small sensor, narrow lens aperture and shallow depth, there are serious challenges in designing an improved phone camera. In particular, these mini cameras suffer from noise -- digital static in the images -- particularly in low light. Combine this with limited dynamic range, and you've got a camera that can perform pretty well in bright daylight, but where image quality starts to suffer as the light dims.
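Why stacking several shots helps with noise can be shown with a toy simulation. The sketch below is an illustrative assumption, not any phone maker's pipeline: it treats an image as a flat list of pixel values, adds independent Gaussian noise to each exposure, and averages a burst of perfectly aligned frames. The function names (`simulate_frame`, `merge_burst`, `rms_error`) and the noise level are hypothetical. Averaging N independent noisy readings shrinks the noise's standard deviation by a factor of √N.

```python
import random
import statistics


def simulate_frame(scene, noise_sigma, rng):
    """One noisy exposure: every pixel gets independent Gaussian 'sensor noise'."""
    return [pixel + rng.gauss(0.0, noise_sigma) for pixel in scene]


def merge_burst(frames):
    """Average already-aligned frames pixel by pixel.

    Real pipelines also align frames and reject moving objects; this sketch
    assumes a perfectly still camera and scene.
    """
    return [statistics.fmean(values) for values in zip(*frames)]


def rms_error(estimate, scene):
    """Root-mean-square difference between an image and the true scene."""
    squared = [(e - s) ** 2 for e, s in zip(estimate, scene)]
    return (sum(squared) / len(squared)) ** 0.5


if __name__ == "__main__":
    rng = random.Random(42)
    scene = [rng.uniform(0.0, 1.0) for _ in range(10_000)]  # hypothetical 1-D "image"
    sigma = 0.2  # hypothetical low-light noise level
    single = simulate_frame(scene, sigma, rng)
    burst = [simulate_frame(scene, sigma, rng) for _ in range(8)]
    merged = merge_burst(burst)
    print(f"single frame noise: {rms_error(single, scene):.3f}")
    print(f"8-frame merge noise: {rms_error(merged, scene):.3f}")
```

With eight frames, the merged noise should come out near 0.2 / √8, or roughly 0.07, versus about 0.2 for a single shot. Real systems get there with far more sophisticated alignment and merging, but the underlying statistics are the same.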

AI learns to solve a Rubik's Cube in 1.2 seconds

Researchers at the University of California, Irvine have created an artificial intelligence system that can solve a Rubik's Cube in an average of 1.2 seconds in about 20 moves. That's more than two seconds faster than the current human world record of 3.47 seconds, and far more efficient than fast human solvers, who usually need about 50 moves.

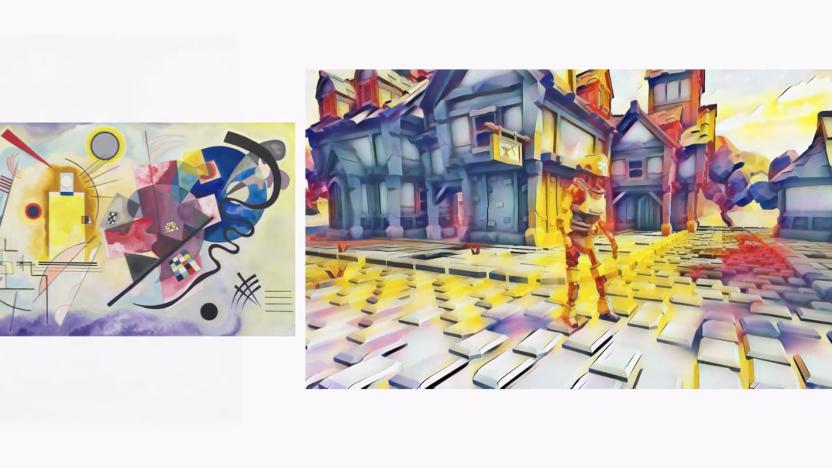

Google Stadia can use AI to change a game's art in real-time

Google's Stadia game streaming service isn't just using the cloud to make games playable anywhere -- it's also using the technology for some clever artistic tricks. A Style Transfer feature uses machine learning to apply art styles to the game world in real time, turning even a drab landscape into a colorful display. If you'd like to play in a realm that resembles Van Gogh's Starry Night, you can.

Fan uses AI to remaster 'Star Trek: Deep Space Nine' in HD

Unfortunately, you're highly unlikely to see an official remaster of Star Trek: Deep Space Nine. Its special effects were shot on video rather than added to film, making an already daunting remastering process that much more difficult -- and since it's not a tentpole show like The Next Generation, CBS might not consider it worth the effort. Machine learning might make it easier for fans to fill the gap, however. One fan, CaptRobau, has experimented with using AI Gigapixel's neural networks to upscale Deep Space Nine to 1080p. The technology is optimized for (and trained on) photos, but apparently works a treat for video. While you wouldn't mistake it for an official remaster, it provides a considerably cleaner, sharper look than the 480p original without introducing visual artifacts.

Google’s DeepMind can predict wind patterns a day in advance

Wind power has become increasingly popular, but its success is limited by the fact that wind comes and goes as it pleases, making it hard for power grids to count on the renewable energy and less likely to fully embrace it. While we can't control the wind, Google has an idea for the next best thing: using machine learning to predict it.

Verily's algorithm helps prevent eye disease in India

Verily's efforts to spot and prevent eye disease through algorithms are becoming more tangible. The Alphabet-owned company has revealed that its eye disease algorithm is seeing its first real-world use at the Aravind Eye Hospital in Madurai, India. The clinic screens patients by imaging their eyes with a fundus camera (a low-power microscope with an attached cam) and sending the resulting pictures to the algorithm, which screens for diabetic retinopathy and diabetic macular edema. Doctors could prevent the blindness that can come from these conditions by catching telltale signs they'd otherwise miss.

Website uses AI to create infinite fake faces

You might already know that AI can put real faces in implausible scenarios, but it's now clear that it can create faces that otherwise wouldn't exist. Developer Phillip Wang has created a website, ThisPersonDoesNotExist, that uses AI to generate a seemingly infinite variety of fake but plausible-looking faces. His tool uses an NVIDIA-designed generative adversarial network (where algorithms square off against each other to improve the quality of results) to craft faces using a large catalog of photos as training material.

AICAN doesn't need human help to paint like Picasso

Artificial intelligence has exploded onto the art scene over the past few years, with everybody from artists to tech giants experimenting with the new tools that technology provides. While the generative adversarial networks (GANs) that power the likes of Google's BigGAN are capable of creating spectacularly strange images, they require a large degree of human interaction and guidance. Not so with the AICAN system developed by Professor Ahmed Elgammal and his team at Rutgers University's AI & Art Lab. It's a nearly autonomous system trained on 500 years' worth of Western artistic aesthetics that produces its own interpretations of these classic styles. And now it's hosting its first solo gallery show in NYC.

AICAN stands for "Artificial Intelligence Creative Adversarial Network," and while it uses the same adversarial network architecture as GANs, it engages them differently. Adversarial networks pair two networks: one generates images based on the visual training data it was given, while the other judges how closely each generated image resembles the actual images in that training data. AICAN pursues different goals. "On one end, it tries to learn the aesthetics of existing works of art," Elgammal wrote in an October FastCo article. "On the other, it will be penalized if, when creating a work of its own, it too closely emulates an established style." That is, AICAN tries to create unique -- but not too unique -- art.

And unlike GANs, AICAN isn't trained on a specific set of visuals, say chihuahuas, blueberry muffins or 20th-century American Cubists. Instead, AICAN incorporates the aesthetics of Western art history as it crawls through databases, absorbing examples of everything (landscapes, portraits, abstractions) without any focus on specific genres or subjects. If a piece was made in the Western style between the 15th and 20th centuries, AICAN will eventually analyze it.
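AICAN's two competing goals, learning the aesthetics of existing art while being penalized for copying an established style, can be written as a generator loss with two terms. The sketch below is a simplified, hypothetical version in the spirit of a creative adversarial network, not AICAN's actual code: it assumes the judging network outputs both a probability that an image is real art and a probability for each of K known styles, and the function names and weighting are illustrative.

```python
import math


def adversarial_term(p_real):
    """Generator's usual GAN term: small when the judging network rates the
    image as likely to be real art (p_real close to 1)."""
    return -math.log(p_real)


def style_ambiguity_term(style_probs):
    """Cross-entropy between the judge's style-class probabilities and a
    uniform distribution. It is smallest when no single established style
    stands out, and grows when the image clearly fits one style."""
    k = len(style_probs)
    return -sum(math.log(p) / k for p in style_probs)


def creative_generator_loss(p_real, style_probs, ambiguity_weight=1.0):
    """Simplified two-goal objective: look like art, but not like one style."""
    return adversarial_term(p_real) + ambiguity_weight * style_ambiguity_term(style_probs)


if __name__ == "__main__":
    # Hypothetical judge outputs for two generated images over four styles.
    clearly_cubist = [0.97, 0.01, 0.01, 0.01]
    ambiguous = [0.25, 0.25, 0.25, 0.25]
    print(creative_generator_loss(0.9, clearly_cubist))  # higher loss: penalized
    print(creative_generator_loss(0.9, ambiguous))       # lower loss
```

Both images are rated equally "art-like" here, so the difference comes entirely from the style term: the image that reads as obviously Cubist is pushed away, which is the "unique, but not too unique" balance described above. The `ambiguity_weight` knob is an assumption standing in for however the real system trades the two goals off.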
So far, the system has found more than 100,000 examples. Interestingly, this learning method is an offshoot of the lab's earlier research into teaching AI to classify various historical art movements. Elgammal notes that this training style more closely mimics the methodology used by human artists. "An artist has the ability to relate to existing art and... innovate. A great artist is one who really digests art history, digests what happened before in art but generates his own artistic style," he told Engadget. "That is really what we tried to do with AICAN -- how can we look at art history and digest older art movements, learn from those aesthetics but generate things that doesn't exist in these [training] files." It can even name the art that it creates using titles of works it has already learned.

To regulate the uniqueness of the generated artworks, Elgammal's team first had to quantify "uniqueness." The team relied on "the most common definition for creativity, which emphasizes the originality of the product, along with its lasting influence," Elgammal wrote in a 2015 article. The team then "showed that the problem [of] quantifying creativity could be reduced to a variant of network centrality problems," the same class of algorithms that Google uses to show you the most relevant results for your search. When the team tested this quantifying system on more than 1,700 paintings, it generally picked out what are widely considered masterpieces: it rated Edvard Munch's The Scream and Picasso's Ladies of Avignon far higher in terms of creativity than their peer works, for example, but panned Da Vinci's Mona Lisa.

The pieces that AICAN produces are stunningly realistic... in that most people can't tell they weren't made by a human artist. In 2017, Elgammal's team showed off AICAN's work at the Art Basel show, where 75 percent of the attendees mistook the AI's work for a human's. One of the machine's pieces sold later that year for nearly $16,000 at auction.
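The network-centrality idea mentioned above can be illustrated with a PageRank-style power iteration, the best-known member of that family of algorithms. This is a hedged sketch, not the Rutgers team's formulation: the toy graph, where each painting points to the earlier works it draws on, and the function name `centrality_scores` are hypothetical, and this plain version measures only influence, not originality.

```python
def centrality_scores(graph, damping=0.85, iterations=100):
    """PageRank-style power iteration.

    graph maps each painting to the earlier paintings it draws on, so score
    flows from later works to the works that influenced them.
    """
    nodes = list(graph)
    n = len(nodes)
    score = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        new_score = {node: (1.0 - damping) / n for node in nodes}
        for node, influences in graph.items():
            if influences:
                share = damping * score[node] / len(influences)
                for target in influences:
                    new_score[target] += share
            else:
                # No outgoing edges: spread this node's score over every node.
                for target in nodes:
                    new_score[target] += damping * score[node] / n
        score = new_score
    return score


if __name__ == "__main__":
    # Hypothetical toy network: three later works all draw on "innovator".
    paintings = {
        "precursor": [],
        "innovator": ["precursor"],
        "follower_1": ["innovator"],
        "follower_2": ["innovator"],
        "follower_3": ["innovator"],
    }
    ranked = sorted(centrality_scores(paintings).items(), key=lambda kv: -kv[1])
    for name, value in ranked:
        print(f"{name:12s} {value:.3f}")
```

In this plain version, "precursor" ends up with the highest score because it inherits everything that flows into "innovator." That is one reason a creativity measure needs a *variant* of centrality that also rewards originality; the sketch above models only the lasting-influence half.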
Despite AICAN's critical and financial successes, Elgammal believes that there is still a market for human artists, one that will greatly expand as this technology enables virtually anybody to generate similar pieces. He envisions AICAN as a "creative partner" rather than simply an artistic tool. "It will unlock the capability for lots of people, so not only artists, it will make more people able to make art," he explained, in much the same way that Instagram's social nature revolutionized photography. He points to the Met Museum in NYC as an example: a quick Instagram search will turn up not just images of the official collection but the visual interpretations of those works by the museum's visitors as well. "Everybody became an artist in their own way by using the camera," Elgammal said. He expects the same to happen with GANs and CANs once the technology becomes more commonplace.

Until then, you'll be able to check out AICAN's first solo gallery show, "Faceless Portraits Transcending Time," at the HG Contemporary in New York City. This show will feature two series of images -- one surreal, the other abstract -- generated from Renaissance-era works. "For the abstract portraits, I selected images that were abstracted out of facial features yet grounded enough in familiar figures. I used titles such as portrait of a king and portrait of a queen to reflect generic conventions," Elgammal wrote in a recent post. "For the surrealist collection, I selected images that intrigue the perception and invoke questions about the subject, knowing that the inspiration and aesthetics all are solely coming from portraits and photographs of people, as well as skulls, nothing else." The show runs February 13th through March 5th, 2019.

Images: Rutgers University

AI-guided material changes could lead to diamond CPUs

Scientists know that you can dramatically alter a crystalline material's properties by applying a bit of strain to it, but finding the right strain is another matter when there are virtually limitless possibilities. There may be a straightforward solution, though: let AI do the heavy lifting. An international team of researchers has devised a way for machine learning to find the strains that will achieve the best results. Their neural network algorithm predicts how the direction and degree of strain will affect a key property governing the efficiency of semiconductors, which could make them far more efficient without relying on educated guesses from humans.

WhatsApp deletes 2 million accounts per month to curb fake news

WhatsApp is trying a number of measures to fight its fake news problem, including study grants, a grievance officer and labels on forwarded messages. However, it's also relying on a comparatively old-fashioned approach: outright deleting accounts. The messaging service has revealed in a white paper that it's deleting 2 million accounts per month. And in many cases, it removes those accounts without users having to complain.

Nuance's AI uses real interactions to make chat bots smarter

Many high-profile brands and companies have a customer service chat bot on their website. Indeed, some research suggests that by 2020 conversational AI will be the main go-to for customer support in large organizations. But as the technology currently stands, it's only as effective as the manual programming that went into its creation, and it relies on the customer asking the right questions or including the right keywords to send the bot down the right branch of its script. Today, though, Nuance Communications has announced a new technology that aims to make the conversational intelligence of chat bots a whole lot smarter.
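The keyword-driven design described above can be sketched as a toy router. Everything here (the `SCRIPT` branches, the keywords and the `route` function) is hypothetical and is not Nuance's or any vendor's API; it only shows why such bots depend on customers using exactly the words the script expects.

```python
import re

# Hypothetical scripted branches, keyed by trigger keyword.
SCRIPT = {
    "refund": "I can help with refunds. What is your order number?",
    "password": "To reset your password, use the 'Forgot password' link.",
    "shipping": "Standard shipping usually takes 3-5 business days.",
}
FALLBACK = "Sorry, I didn't understand that. Could you rephrase?"


def route(message):
    """Send the customer down the first script branch whose keyword appears."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    for keyword, reply in SCRIPT.items():
        if keyword in words:
            return reply
    return FALLBACK


if __name__ == "__main__":
    print(route("I'd like a refund, please"))  # matches the "refund" branch
    print(route("Can I get my money back?"))   # same intent, no keyword: fallback
```

The second customer wants the same thing as the first, but because "refund" never appears, the bot falls back to asking them to rephrase. Learning from real interactions, as Nuance describes, is aimed at exactly this kind of gap.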

McCormick hands over its spice R&D to IBM's AI

McCormick might be a brand name you recognize from its herbs and spices, French's Classic Yellow Mustard or even "edible" KFC-flavored nail polish. For more than 40 years, it's recorded reams of data on product formulas, customer taste preferences and flavor palettes. Now it's harnessing artificial intelligence in flavor development.

Salvador Dali's AI clone will welcome visitors to his museum

If you're planning a trip to the Dali Museum this spring, you might meet an unexpected guest: namely, Salvador Dali himself. The St. Petersburg, Florida venue has announced that an AI recreation of the artist will grace screens across the museum starting in April. A team from Goodby Silverstein & Partners used footage of Dali to train a machine learning system to mimic the artist's face, and superimposed that on an actor with a similar physique. The virtual painter will both draw on Dali's own writings and comment on events that happened well after his 1989 death -- it should be at once educational and as surreal as Dali's work.

Facebook backs an independent AI ethics research center

Facebook is just as interested as its peers in fostering ethical AI. The social network has teamed up with the Technical University of Munich to back the formation of an independent AI ethics research center. The plainly titled Institute for Ethics in Artificial Intelligence will wield the university's academic resources to explore issues that "industry alone cannot answer," including those in areas like fairness, privacy, safety and transparency.

AI is better at bluffing than professional gamblers

The act of gambling on games of chance has been around for as long as the games themselves. For as long as there's been money to be made wagering on the uncertain outcomes of these events, bettors have been leveraging mathematics to give them an edge on the house. As gaming has moved from bookies and casinos into the digital realm, gamblers are beginning to use modern computing techniques, especially AI and machine learning (ML), to increase their odds of winning. But that betting blade cuts both ways, as researchers work to design artificial intelligences capable of beating professional players at their own game -- and even out-wagering sportsbooks.

Tablo's newest over-the-air DVR automatically skips ads

If you'd rather just watch Netflix and catch TV over the air for free, while still being able to skip ads, Nuvyyo's Tablo Quad DVR might be for you. Its four tuners let you find, record, store and stream up to four live antenna TV channels at once. More importantly, Tablo has caught up to TiVo's Roamio, letting you skip OTA commercials automatically via a beta feature. It uses a cloud-based system that marries algorithms and machine learning to help you enjoy an ad-free experience without lifting a finger.

IBM fingernail sensor tracks health through your grip

The strength of your grip can frequently be a good indicator of your health, and not just for clearly linked diseases like Parkinson's -- it can gauge your cognitive abilities and even your heart health. To that end, IBM has developed a fingernail sensor that can detect your grip strength and use AI to provide insights. The device uses an array of strain gauges to detect the deformation of your nail as you grab objects, with enough subtlety to detect tasks like opening a pill bottle, turning a key or even writing with your finger.

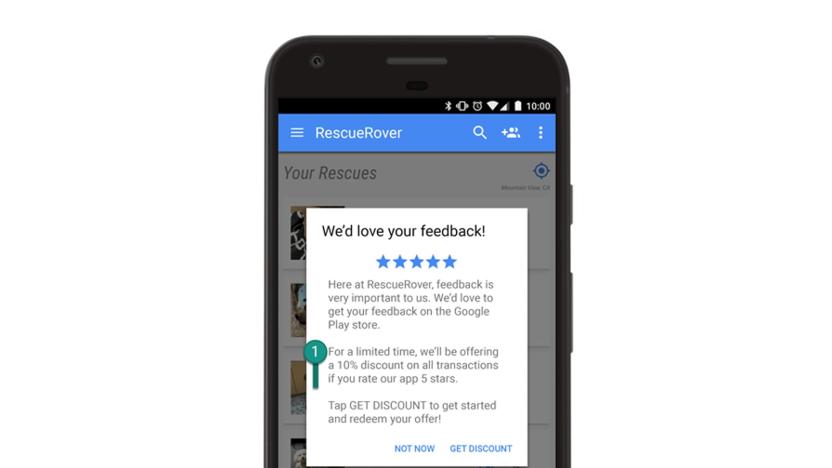

Google pulled 'millions' of junk Play Store ratings in one week

Google is just as frustrated with bogus app reviews as you are, and it's apparently bending over backwards to improve the trustworthiness of the feedback you see. The company instituted a system this year that uses a mix of AI and human oversight to cull junk Play Store reviews and the apps that promote them, and the results are slightly intimidating. In an unspecified recent week, Google removed "millions" of dodgy ratings and reviews, and "thousands" of apps encouraging shady behavior. There are a lot of attempts to game Android app reviews, in other words.

YouTube removed 58 million videos last quarter for violating policies

YouTube has been publishing quarterly reports that detail how many videos it removes for policy violations, and its most recent report also includes data on channel and comment removals. Between July and September, the company took down 7.8 million individual videos, nearly 1.7 million channels and over 224 million comments, and YouTube noted that machine learning continues to play a major role in that effort. "We've always used a mix of human reviewers and technology to address violative content on our platform, and in 2017 we started applying more advanced machine learning technology to flag content for review by our teams," the company said. "This combination of smart detection technology and highly-trained human reviewers has enabled us to consistently enforce our policies with increasing speed."

'AmalGAN' melds AI imagination with human intuition to create art

Don't worry, they only look like the Pokémon of your nightmares. The images you are about to see are, in fact, at the very bleeding edge of machine-generated imagery, mixed with collaborative human-AI production by artist Alex Reben and a little help from some anonymous Chinese artists. Reben's latest work, dubbed AmalGAN, is derived from Google's BigGAN image-generation engine. Like other GANs (generative adversarial networks), BigGAN uses a pair of competing AIs: one to randomly generate images, the other to grade those images based on how close they are to the training material. However, unlike previous image generators, BigGAN is backed by Google's mammoth computing power and uses that capability to create incredibly lifelike images. More importantly, it can also be leveraged to create psychedelic works of art, which is what Joel Simon has done with the GANbreeder app. This web-based program uses the BigGAN engine to combine separate images into mashups -- say, 40 percent beagle, 60 percent bookcase. What's more, it can take these generated images and combine (or "breed") them into second-generation "child" images. Repeating this breeding process results in bizarre, dreamlike pictures. Reben's contribution is to take that GANbreeder process and automate as much of it as humanly possible. Per the AmalGAN site:

1. An AI combines different words together to generate an image of what it thinks those words look like.
2. The AI then produces variants of those images by "breeding" them with other images, creating "child" images.
3. Another AI shows the artist several "child" images, measuring his brainwaves and body signals to select which image he likes best.
4. Steps 2 and 3 are repeated until the AI determines it has reached an optimal image.
5. Another AI increases the resolution of the image by filling in blanks with what it thinks should exist there.
6. The result is sent to be painted on canvas by anonymous painters in a Chinese painting village.
7. A final AI looks at the image, tries to figure out what is in it, and makes a title.

The first two steps are handled by GANbreeder. "As far as I understand it, right now [GANbreeder mixes images] randomly," Reben told Engadget. "So it decides to either increase or decrease the percentages of the two images or add new models. You know, like 5 percent cow, and that'll be one of the images that it shows."

Once the system has conceived a sufficient selection of potential pictures, Reben pares down the collection using a separate AI trained to determine how much he likes a specific piece based on his physical reaction to it. "I trained a deep learning system on the body sensors that I was wearing," Reben explains. "I had a program show me both good and bad art -- art that I liked, and art that I didn't like -- and I recorded the data." He then used that data to train a simple neural network to figure out the physiological differences between his reactions. "Basically, it gives you that sort of dichotomous indication of what this art is [to me] from my brain waves and body signals," he continued. "It picks up on EEG; I also have heart rate and GSR. I think I might also add facial-emotion-recognition stuff through my webcam."

The selection process varies between image sets, Reben said. Sometimes the "right" picture would appear among the first presented by the AI; other times he had to dig through multiple generations of child images to find one he liked. Once he's selected the specific images he plans to include in the official project, Reben has the digital images oil-painted onto canvas by anonymous Chinese artists. "The easiest 'why' is because I can't paint," Reben quipped. "Using anonymous Chinese painters is another link in this autonomous system, where my hand is not on the artworks -- just my brain and my eyeballs."
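The breed-and-select cycle in AmalGAN's steps 2 through 4 amounts to a simple evolutionary loop. The sketch below is a hypothetical model of that loop, not GANbreeder's or AmalGAN's code: it represents each image only by its class percentages (the "40 percent beagle, 60 percent bookcase" recipe), ignores the random latent vector BigGAN also takes, never renders an image, and replaces Reben's biosignal-trained selector with an arbitrary `preference_score` function. The names `normalize`, `breed` and `evolve` are illustrative.

```python
import random


def normalize(genes):
    """Rescale class weights to sum to 1, e.g. {"beagle": 0.4, "bookcase": 0.6}."""
    total = sum(genes.values())
    return {label: weight / total for label, weight in genes.items() if weight > 0}


def breed(parent_a, parent_b, rng, mutation=0.1):
    """Hypothetical crossover: average the parents' class weights, then nudge
    each weight up or down by up to `mutation` (10 percent by default)."""
    child = {}
    for label in sorted(set(parent_a) | set(parent_b)):  # sorted for reproducibility
        weight = (parent_a.get(label, 0.0) + parent_b.get(label, 0.0)) / 2
        child[label] = weight * (1 + rng.uniform(-mutation, mutation))
    return normalize(child)


def evolve(seed_a, seed_b, preference_score, generations=5, brood=6, seed=0):
    """Breed a brood of children, keep the two the scorer likes best, repeat.

    `preference_score` stands in for the selector that, in Reben's setup, is
    driven by his biosignals; here it is any function from genes to a number.
    """
    rng = random.Random(seed)
    parents = [normalize(seed_a), normalize(seed_b)]
    for _ in range(generations):
        children = [breed(parents[0], parents[1], rng) for _ in range(brood)]
        children.sort(key=preference_score, reverse=True)
        parents = children[:2]
    return parents[0]


if __name__ == "__main__":
    best = evolve(
        {"beagle": 0.4, "bookcase": 0.6},
        {"cow": 0.05, "clock": 0.95},
        preference_score=lambda genes: genes.get("cow", 0.0),  # hypothetical taste
    )
    print({label: round(weight, 3) for label, weight in best.items()})
```

Swapping the lambda for a model that scores rendered images would turn this into something closer to the described pipeline; the fixed `generations` count is a simplification of step 4, where the AI decides on its own when it has reached an optimal image.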
Transferring the works to a physical medium also helps sidestep an inherent shortcoming of the BigGAN system: the images are so resource-heavy to produce that they've yet to be created at a resolution larger than 512 x 512 pixels. The anonymous artists are "basically using human brain power to upscale that image into a canvas," he said. "So that aspect of it is also interesting because there's gonna be a little bit of human interpretation." Finally, Reben uses Microsoft's CaptionBot AI to create titles for each image. "I thought it was interesting removing more and more of a human from the process," Reben concluded. "I also like seeing what the AI interprets these as ... because it doesn't catch everything."

For now, the BigGAN engine doesn't have many practical applications, and its research paper, which was published in September, is under review for a 2019 AI conference. The system itself has a bit of a counting problem, as evidenced by its continual insistence that clock faces have more than two hands and spiders have anywhere from four to 17 legs, but these idiosyncrasies could prove a boon to artists like Reben and Simon. "One of the things Joel [Simon] is doing... is he would like to turn that tool, that website, into a tool for creative people," Reben said. Artists would be able to train the system on their own images, not just Google's stock set, experiment with the output levels and "use it as a way to sort of spark imagination and creativity, which I think is great." If you're interested in getting prints of any of these pieces, check out the Charles James Gallery.